The MediSens conference, to be held in London, UK on Dec 13-14, 2016, has announced its agenda:

A brief history of medical imaging

• Imaging in the pre-digital age

• The breakthrough of digital X-rays – applications in medicine and beyond

• Impact of digitisation on the clinical environment

• Lessons learned by a pioneer in digital X-rays

• Some thoughts about the future…

Special Guest Keynote

Delivering an approach driven by clinical need

• Developments and challenges in cancer detection

• Understanding the technical and human challenges for medical imaging

• New techniques towards 3D quantitative imaging to ensure early and improved diagnosis and effective treatment

• Patient-specific diagnosis – Precision Medicine and personalised care

Dr Dimitra Darambara, Team Leader – Multimodality Molecular Imaging, The Institute of Cancer Research

An overview of research and development into molecular imaging

• Understanding the challenges in molecular imaging

• Multimodal imaging: what are the data implications?

• Assessing the advantages and opportunities of molecular imaging for patient groups vs. cost

• Advantages of PET/SPECT/CT, optical-PET, SPECT-MRI, PET-MRI: which mode has the best clinical potential?

Speaker TBC

Advances in (digital) single-photon detectors for PET

• Single-photon Avalanche Diode (SPAD) fundamentals

• From single SPAD pixels to arrays and systems

• Examples of state-of-the-art SPAD-based PET systems and frontline R&D activity

Dr Claudio Bruschini, Project Manager & Senior Scientific Collaborator, EPFL

Computed tomography using a proton therapy beam: The PRaVDA collaboration

• Translation of technology from basic science to medical imaging

• Challenges of imaging proton beams

• PRaVDA integrated imaging system for proton beam computed tomography

Dr Phil Evans, Professor of Medical Radiation Imaging, Centre for Vision, Speech and Signal Processing, University of Surrey

What’s going on in the world of solid state medical imaging

• Exploring the new wave of innovation focused on CMOS image sensors in the solid state medical imaging market

• Assessing the status of CIS technology and related medical imaging applications

Jérôme Mouly, Technology & Market Analyst, Yole Développement

Advances in CMOS wafer-scale imagers for medical imaging

• Clinical needs of X-ray imaging modalities and how they flow down to detector CTQs (critical to quality)

• CMOS detector benefits and challenges (IQ, artefacts, reliability, cost)

• Opportunities for CMOS in medical imaging

Dr Biju Jacob, Senior Engineer, X-ray Detector Development, General Electric

How CMOS can be further leveraged to advance medical imaging

• CMOS Image Sensor (CIS) technology: where it is today

• What can CIS bring to the medical imaging community

• Where is the technology going and how could this impact medical imaging

Dr Renato Turchetta, CEO, Wegapixel

Use of hybrid-organic X-ray detectors to improve the specificity and sensitivity of digital flat panel X-ray detectors

• X-ray imaging with hybrid organic-inorganic conversion layers

• Scintillator particles embedded in an organic semiconducting matrix

• Digital flat panel X-ray detectors using a “quasi-direct” X-ray conversion technology

• High-resolution digital flat panel X-ray detectors

• DiCoMo: Combination of hybrid frontplane and active-pixel backplane made of metal oxide TFTs

Dr Sandro Tedde, Senior Key Expert Research Scientist, Siemens Healthcare

Image-enhanced endoscopy and image sensor design for improved diagnostic accuracy

• Introduction to the technical aspects of image-enhanced endoscopy

• Efforts towards smaller-diameter endoscopes and higher-resolution miniature cameras

• Requirements for image sensor design in terms of the high occupancy of the secured pixel area

• High resolution and high S/N ratio

Koichi Mizobuchi, Deputy General Manager, Medical Imaging Technology Department, Olympus Corporation

Advances in cardiac imaging: what it means for the future possibilities of X-ray technology

Prof Gary Royle, Professor of Medical Radiation Physics, UCL

Disease diagnosis in the distal lung using time-resolved CMOS single photon detector arrays

• Fibre-based sensing and imaging system allowing minimally invasive diagnostics in the distal lung

• Sensing physiological parameters like pH through exogenous fluorophores or Surface Enhanced Raman Sensors (SERS)

• Application of SPAD sensors to aid in the disambiguation between bacterial fluorescent probes and tissue fluorescent signals

Prof Robert Henderson, Institute of Integrated Micro and Nano Systems, University of Edinburgh

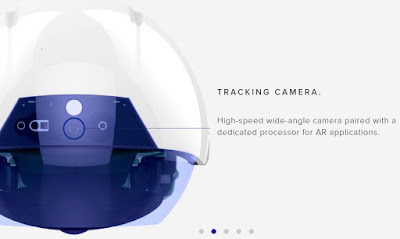

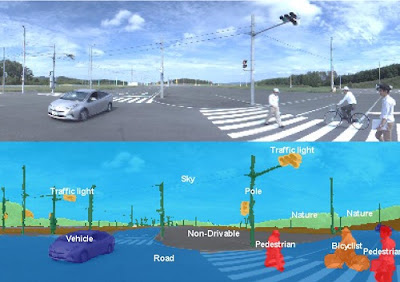

Closing keynote: How robotics is changing the face of surgery

• Understanding the imaging requirements for surgical robots

• Current and future computer vision specifications

• How robotics could impact diagnosis and treatment

Professor Guang-Zhong Yang, Director & Co-Founder, Hamlyn Centre for Robotic Surgery & Deputy Chairman, Institute of Global Health Innovation, Imperial College London

In addition to the main conference, there is a workshop by Albert Theuwissen:

First session:

• CMOS image sensor basics

• Rolling and global shutter

• Ins and Outs of 1-transistor, 3-transistor and 4-transistor pixels

Second session:

• From X-rays to digital numbers

• Stitching to make monolithic large area devices

• Butting to make large imaging arrays

• Binning and averaging

• Yield/cost of large area imagers
