Brigates appears to have abandoned all its markets except surveillance and security, as its recent product list suggests:

ZTE Dual Camera Smartphone

ZTE Mobile World Magazine features Axon dual camera smartphone, starting page 16:

AXON has a 13-megapixel camera on the back and a secondary 2-megapixel camera that sits above it to help capture 3D depth for a refocusable photo. To get a better idea of the story behind the challenging work, Mobile World talked with two engineers from ZTE’s camera lab.

Interviewee: Zhu Yufei, responsible for the North American camera team and camera solution planning

Why did you decide to introduce a dual lens camera into AXON? How will the smartphone camera develop in the future?

Dual-lens camera technology is prospective. Photos taken by a single-lens camera are some kind of object simulation, while photos taken by a dual lens camera are more vivid because dual lens cameras capture more information. ...In terms of intelligence, we improve image stabilization and low-light photography through better algorithms and relevant technologies on AXON.

Are there any differences between ZTE’s dual-lens camera and other similar products?

The distance between the two lenses of AXON is wider. AXON outperforms other dual-lens smartphones in the large aperture effect, depth calculation accuracy, and distance of objects from the camera.

What are the trends for future smartphone cameras?

Both single-lens cameras and dual-lens cameras will coexist. Single-lens cameras will dominate in the near future, and dual lens cameras will continue to improve.

Interviewee: Xiao Longan, responsible for the camera software development

What are the tough nuts to crack in introducing the dual-lens camera to AXON? How did you overcome these difficulties?

...No smartphone project at ZTE has required so many human resources on the R&D of the camera app, and no camera app has received so much attention.

Which camera feature do you like most?

Background blur and refocus...

Can you explain more about how the refocus feature works?

The dual-lens camera captures and adds depth data to each object in the image. These data allow you to refocus a picture and add a blur effect to select parts of the image.

Camera Module Report Forecasts Dual Camera Adoption

Korea-based KB Securities analysts have come up with a report on the camera module market. A few quotes, in a somewhat broken Google translation:

"The camera module shipment growth to 2019 is expected to reach four times the mobile device shipment growth...

The premium smartphone dual (Dual) and 3D, pixels in the low-end phones and increased competition expected with OIS...

Dual Camera module adopted is because it is expected to accelerate in 2016 from this...

Front high pixel camera module, dual camera module, OIS rate mounted on the side as expected the speedy pace..."

[Figure: Mobile devices and camera module shipment forecast]

[Figure: Pixel mobile camera modules share outlook]

Gpixel Expands its Lineup

Changchun, China-based Gpixel has expanded its image sensor portfolio, now offering 9 standard products for machine vision and industrial applications:

Sony Device Group Reorgs into Three Divisions

Nikkei reports that Sony has announced a structural reform and personnel changes that will take effect on Jan 1, 2015 (probably a typo, should be 2016). The Sony Device Solution Business Group will be split into three organizations: "Automotive Division," "Module Division" and "Product Development Division."

Nikkei sees several reasons for the re-org:

- The reorg will strengthen Sony image sensor business.

- Sony can better address the fast growing automotive image sensor market where On Semi currently has the largest share.

- By offering modules rather than image sensors, Sony can better support customers that lack the capability to tune and adapt image quality.

The other reasons for shifting focus from image sensors to camera modules might be an attempt to replicate Sharp camera module success, and an intention to capture a larger chunk of the imaging foodchain.

ST Automotive Imaging Solutions

ST publishes a promotional video on its automotive image sensors and image processors solutions:

Update: Upon a closer look, VG6640 image sensor and STV0991 image processor appear to be the new, previously unannounced devices.

SystemPlus Publishes Fujitsu Iris Recognition Module Teardown

SystemPlus publishes reverse engineering of Fujitsu Iris Authentication Module IR Camera Module & IR LED. The module has been extracted from Fujitsu Arrows NX F-04G smartphone that uses iris scan as the next biometric login technology. Compared to fingerprint sensors, Fujitsu claims that the solution features a faster, safer and more secure authentication. It is also a cost effective solution due to the reuse of standard CIS and LED components. OSRAM is said to be the IR LED manufacturer and has designed this 810nm LED exclusively for this iris scan application (this sounds somewhat contradictory to the re-use statement).

Grand View on LIDAR Market

GlobeNewsWire: Grand View Research estimates the global LiDAR market size at over $260M in 2014. The market is expected to grow to $944.3M by 2022 driven by improved automated processing ability in data processing and image resolution capabilities.
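As a rough check on the growth these two figures imply, the compound annual growth rate can be computed from the endpoints. The sketch below assumes a 2014 base of exactly $260M (the text only says "over $260M") and uses the $944.3M forecast for 2022; the function name is illustrative.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Assumption: the 2014 base is taken as exactly $260M. The source says
# "over $260M", so the true implied rate would be slightly lower.
lidar_cagr = implied_cagr(260.0, 944.3, 2022 - 2014)
print(f"Implied LiDAR market CAGR, 2014-2022: {lidar_cagr:.1%}")
```

Under that assumption the implied rate comes out between 17% and 18% per year.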

Olympus Visible + IR Stacked Sensor

Nikkei publishes an article on the Olympus IEDM 2015 presentation on a stacked visible and IR sensor. While it mostly reiterates the previously published data, some info is new:

"The laminated image sensor is made by combining (1) an image sensor equipped with an RGB color filter for visible light (top layer) and (2) a near-infrared image sensor (bottom layer). Each layer functions as an independent sensor and independently outputs video signals.

The visible-light image sensor is a backside-illuminated type, and its light-receiving layer (made of semiconductor) is as thin as 3μm. Each pixel measures 3.8 x 3.8μm, and the number of pixels is 4,224 x 240."

CBS Interviews Graham Townsend, Head of Apple Camera Team

CBS publishes an interview with the Apple management team that includes a part about the Apple camera team and its head, Graham Townsend:

One of the most complex engineering challenges at Apple involves the iPhone camera, the most used feature of any Apple product. That's the entire camera you're looking at in my hand.

Graham Townsend: There's over 200 separate individual parts in this-- in that one module there.

Graham Townsend is in charge of a team of 800 engineers and other specialists dedicated solely to the camera. He showed us a micro suspension system that steadies the camera when your hand shakes.

Graham Townsend: This whole sus-- autofocus motor here is suspended on four wires. And you'll see them coming in. And here we are. Four-- These are 40-micron wires, less than half a human hair's width. And that holds that whole suspension and moves it in X and Y. So that allows us to stabilize for the hand shake.

In the camera lab, engineers calibrate the camera to perform in any type of lighting.

Graham Townsend: Go to bright bright noon. And there you go. Sunset now. There you go. So, there's very different types of quality of lighting, from a morning, bright sunshine, for instance, the noonday light. And then finally maybe--

CBS: Sunset, dinner--

Graham Townsend: We can simulate all those here. Believe it or not, to capture one image, 24 billion operations go on.

CBS: Twenty-four billion operations going on--

Graham Townsend: Just for one picture--

Image Sensors in 13 min

Filmmaker IQ publishes a short video lecture on image sensors. While not perfectly accurate in some parts, it's amazing how much info one can squeeze in a 13 min video:

Yole Interviews Heimann Sensor CEO

Yole publishes an interview with Heimann Sensor CEO Joerg Schieferdecker. Heimann Sensor is a 14-year-old German IR image sensor manufacturer. Schieferdecker talks about a number of emerging thermal IR imaging applications, such as a contactless thermometer embedded into a mobile phone:

"We are working to develop such a spot thermometer within Heimann Sensor. Many players in the mobile phone business are looking at these devices but we think that two more years of development are needed in order to get them working. First, single pixel sensors will be used and then arrays will be introduced into the market. The remaining issues are significant. For example, getting a compact device, within a thin ‘z budget’, is very challenging and needs further development at device and packaging level. In the same way, heat shock resistance is a challenge at the moment.

The applications of remote temperature sensing are just huge. Fever measurement, checking outdoor temperatures from your mobile phone, water temperature measurement for a baby’s bath or bottle - the list is endless. Most require 1 or 2°C accuracy, which is already available, while body temperature needs further development to reach 0.2°C accuracy.

We expect to see the first mobile phone with this feature around Christmas 2017."

Next-Gen Tensilica Vision Processor IP

BDTI publishes an article on the next generation Cadence Tensilica Vision P5 Processor IP featuring lower power and higher speed:

The base P5 core is said to be less than 2 mm2 in size in a 16nm process, with minor additional area increments for the optional FPU and cache and instruction memories. Lead customers have been evaluating and designing in Vision P5 for several months, and the core is now available for general licensing.

Article About Caeleste

The Belgian local DSP Valley Newsletter publishes an article about Caeleste (see page 6). A few quotes:

"Caeleste was created in December 2006, by a few people around the former CTO of Fillfactory/Cypress. After an organic growth start, Caeleste is now 22 persons large and fast growing with a CAGR of 30-50%. It is specialized in the design and supply of custom specific CMOS Image Sensors (CIS). Caeleste is still 100% owned by its founders.

...In fact, Caeleste has almost the largest CIS design team in Europe, exclusively devoted to customer specific CMOS image sensors."

Some of Caeleste R&D projects:

- Caeleste has the world record in low noise imaging: We have proven a noise limit of 0.34 e-rms; this implies that under low flux conditions (e.g. in astronomy) each and every photon can be counted and that the background is completely black, provided that the dark current is low enough.

- Caeleste has also designed a dual color X-ray imager, which allows a much better discrimination and diagnosis of cancerous tissue than the conventional grey scale images.

- Caeleste has also its own patents for 3D imaging, based on Time of Flight (ToF) operation. This structure allows the almost noise free accumulation of multiple laser pulses to enable accurate distance measurements at long distance or with weak laser sources.

TowerJazz CIS Business Overview

Oppenheimer publishes a December 14, 2015 report on the TowerJazz business that covers its CIS part, among many other things:

"CMOS Image Sensors (CIS). Approximately 15% of sales, ex-Panasonic. Tower offers advanced CMOS image sensor technology for use in the automotive, industrial, medical, consumer, and high-end photography markets. Tower estimates the silicon portion of the CMOS image sensor market to be a ~$10B TAM, with ~$3B potentially being served via foundry offerings. Today, roughly two-thirds of this market serves the cellular/smartphone camera market; however, the migration to image-based communication across the automotive, industrial, security and IoT markets is expanding the applications for Tower's CIS offerings. Tower is tracking toward 35% Y/Y growth in this segment in 2015, outpacing the industry's 9% compounded growth rate, as the company has gained traction in areas previously not served by specialty foundries.

Tower has IP related to highly customized pixels, which lend the technology to a wide variety of applications. We see opportunities in automotive, security/surveillance, medical imaging, and 3D gesture control driving sustainable growth in this segment long term."

[Figure: Oppenheimer: "We expect image sensor growth to outpace the overall optoelectronics segment, and forecast growth at a 6% CAGR from 2014 to 2019 to approximately $13.3B, from $10.4B in 2014"]

Mobileye vs George Hotz

Bloomberg publishes an article about the famous 24-year-old hacker George Hotz, who is building a self-driving car in his garage based on 6 cameras and a LIDAR. Hotz says that his technology is much better than that of Mobileye used in the Tesla Model S: “It’s absurd,” Hotz says of Mobileye. “They’re a company that’s behind the times, and they have not caught up.”

Mobileye spokesman Yonah Lloyd denies that the company’s technology is outdated, “Our code is based on the latest and modern AI techniques using end-to-end deep network algorithms for sensing and control.”

SeekingAlpha reports that Tesla comes to Mobileye's rescue. "We think it is extremely unlikely that a single person or even a small company that lacks extensive engineering validation capability will be able to produce an autonomous driving system that can be deployed to production vehicles," says Tesla. "[Such a system] may work as a limited demo on a known stretch of road -- Tesla had such a system two years ago -- but then requires enormous resources to debug over millions of miles of widely differing roads. This is the true problem of autonomy: getting a machine learning system to be 99% correct is relatively easy, but getting it to be 99.9999% correct, which is where it ultimately needs to be, is vastly more difficult. Going forward, we will continue to use the most advanced component technologies, such as Mobileye’s vision chip, in our vehicles. Their part is the best in the world at what it does and that is why we use it."

PMD and Infineon Present Improved ToF Sensors

Infineon and pmd unveil their latest 3D image sensor chip of the REAL3 family. Compared to the previous version, both the optical sensitivity and the power consumption of the new 3D sensors have been improved, and the built-in electronics take up little space. The chips make it possible for cell phones to operate mini-camera systems that can measure 3D depth data.

The optical pixel sensitivity of the new 3D image sensors is now double that of the previous version. This means that their measurement quality is just as good, while at the same time working with only half of the emitted light output and the camera’s system power consumption is almost halved. The improved optical pixel sensitivity is the result of applying one microlens to each of the pixels of the 3D image sensor chip.

The new 3D image sensor chips were specifically designed for mobile devices, where most applications only need a resolution of 38,000 pixels. The previous 100,000-pixel matrix was accordingly scaled down, and other functional blocks, such as the analog/digital converter for the chip area and performance range were optimized. Thus, the system cost is lower: the sensor chip area is almost halved, and, because of the lower resolution, smaller and less expensive optical lenses can be used.

The three new REAL3 3D image sensors differ in their resolutions: the IRS1125C works with 352 x 288 pixels, the IRS1645C with 224 x 172 pixels and the IRS1615C with 160 x 120 pixels. In this respect, the IRS1645C and IRS1615C are produced on half the chip area of the IRS1125C.
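The pixel counts behind these resolutions are easy to check against the "100,000-pixel" and "38,000 pixels" figures mentioned in the announcement. A small sketch (the dictionary layout is just for illustration):

```python
# Resolutions as given in the announcement: (width, height) in pixels.
real3_sensors = {
    "IRS1125C": (352, 288),
    "IRS1645C": (224, 172),
    "IRS1615C": (160, 120),
}

# Total pixel count per sensor.
pixel_counts = {name: w * h for name, (w, h) in real3_sensors.items()}

for name, count in pixel_counts.items():
    print(f"{name}: {count:,} pixels")
```

The IRS1125C comes to 101,376 pixels, in line with the 100,000-pixel matrix, and the IRS1645C comes to 38,528 pixels, in line with the 38,000-pixel figure. The IRS1615C has 19,200 pixels.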

Google’s “Project Tango” uses Infineon's IRS1645C 3D image sensor chip. The complete 3D camera for Google “Tango”, consisting of the IRS1645C and an active infrared laser illumination, is housed in an area of approximately 10 mm x 20 mm. With a range of up to 4 meters (13 feet), a measuring accuracy of 1% of the distance and a frame rate of 5 fps, the 3D camera subsystem consumes less than 300 mW in active mode.

The IRS1125C will be available in volume in Q1 2016. The start of production for the smaller IRS1645C and IRS1615C is planned for Q2 2016. All three types are exclusively delivered as a bare die to allow maximum design flexibility while minimizing system costs.

CCD vs CMOS: Smear

Adimec publishes a nice demo of smear in CCDs and virtual absence of it in CMOS sensors. The smear manifests itself in:

- Contrast reduction of the image

- Questionable patterns depending on image contents

- Higher noise level in the dark areas of the image

- The three effects above combined: degradation of DRI (detection, recognition, identification)

- For color: a shift of white balance, depending on the smear level

2015 Electronic Imaging Symposium Presentations

IS&T Electronic Imaging (EI) Symposium publishes a number of interesting presentations, such as "A novel optical design for light field acquisition using camera array:"

"3D UHDTV contents production with 2/3 inch sensor cameras:"

TechInsights iPhone 6s, 6s Plus 12MP Sensor Report

TechInsights announces a reverse engineering report on the 12MP Sony sensor from the Apple iPhone 6s and 6s Plus, to be published on Dec 22, 2015:

"The Sony 12 megapixel CMOS image sensor is fabricated using a 4 metal (Cu), 90 nm CMOS image sensor process. The backside illuminated die is wafer bonded to an underlying control ASIC using through silicon vias (TSVs) to provide die-to-die interconnections. The image sensor features a 1.22 µm large pixel with RGB color filters and a single layer of microlenses. Four pixels share a common readout circuit comprising four transfer gates, a reset gate, select gate and source follower in a 1.75T architecture."

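The "1.75T" label follows from counting the transistors in the shared readout described in the quote: four transfer gates plus one reset, one select and one source follower transistor, divided among four pixels. A quick sketch of that arithmetic (variable names are illustrative):

```python
# Transistors in one shared readout group, as listed in the TechInsights description.
transfer_gates = 4      # one transfer gate per pixel
reset_gate = 1
select_gate = 1
source_follower = 1
pixels_sharing = 4      # four pixels share the readout circuit

transistors_per_pixel = (
    transfer_gates + reset_gate + select_gate + source_follower
) / pixels_sharing
print(f"{transistors_per_pixel}T")  # prints "1.75T"
```
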
IISW 2017 Time and Location Announced

The 2017 International Image Sensor Workshop (IISW) announces its time and location. The workshop is to be held on May 30th – June 2nd, 2017 in Hiroshima, Japan. The Grand Prince Hotel Hiroshima is located on a small island in south Hiroshima with a nice archipelago view from the hotel. Miyajima and downtown Hiroshima, which are UNESCO World Heritage sites, are within reach and are good excursion candidates.

TI Unveils ToF SoC

TI releases its first SoC for 3D ToF imaging, integrating a ToF sensor, AFE, timing generator, and depth engine on a single chip. While the OPT8320 chip is based on 30um Softkinetic pixel technology, it is made by TI and most of the IP was developed in-house at TI. TI publishes a detailed datasheet with example applications and compares the chip with an older non-integrated ToF imager:

Rumor: Microsoft Lumia 1050 Smartphone to Feature 50MP Sensor

JBHNews quotes a rumor that the Microsoft Lumia 1050 smartphone, to be released in January or February 2016, would feature a 50MP camera with a variable aperture, a zoom lens, OIS and laser AF. "Overall, Lumia 1050 would be a defining phone in the history of Microsoft."

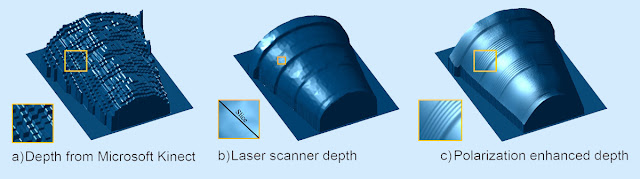

MIT Proposes to Use Polarization to Improve 3D Imaging

Optics.org: Researchers from the MIT Media Lab (Prof. Ramesh Raskar) claim that exploiting the polarization of light allows them to increase the resolution of conventional 3-D imaging devices by as much as 1,000 times. They describe the new 3D imaging system, which they call Polarized 3D, in a paper they are presenting at the International Conference on Computer Vision in Santiago, Chile, on December 11-18, 2015:

"Today, 3-D cameras can be miniaturized to fit on cellphones,” said Achuta Kadambi, a PhD student in the MIT Media Lab and one of the system’s developers. “But this involves making compromises to the 3-D sensing, leading to very coarse recovery of observed geometry. That is a natural application for polarization, because you can still use a low-quality sensor, but by adding a polarizing filter gives a result quality that is better than from many machine-shop laser scanners.”

Polarization affects the way in which light bounces off of physical objects. Light within a certain range of polarizations is more likely to be reflected. So the polarization of reflected light carries information about the geometry of the objects it has struck. Microsoft Kinect is used for a coarse depth estimation, while polarization helps to interpolate and improve its depth accuracy.

“The work fuses two 3-D sensing principles, each having pros and cons,” commented Yoav Schechner, an associate professor of electrical engineering at Technion — Israel Institute of Technology. “One principle provides the range for each scene pixel: This is the state of the art of most 3-D imaging systems. The second principle does not provide range. On the other hand, it derives the object slope, locally. In other words, per scene pixel, it tells how flat or oblique the object is.”
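To illustrate the idea of fusing a coarse per-pixel range measurement with local slope information, here is a minimal one-dimensional sketch. It is not the method from the Polarized 3D paper: it assumes the slopes are already known exactly (in the real system they would be derived from polarization), models the coarse sensor as simple quantization, and blends the two with a plain least-squares cost solved by Gauss-Seidel iteration. All function names and parameters are hypothetical.

```python
import math

def fuse_depth_with_slope(coarse, slope, weight=0.1, iterations=500):
    """Minimize weight*sum((z[i]-coarse[i])^2) + sum((z[i+1]-z[i]-slope[i])^2)
    over z, using Gauss-Seidel sweeps (hypothetical toy model)."""
    z = list(coarse)
    n = len(z)
    for _ in range(iterations):
        for i in range(n):
            # Data term: stay close to the coarse range measurement.
            num = weight * coarse[i]
            den = weight
            if i > 0:
                # Slope term with the left neighbour.
                num += z[i - 1] + slope[i - 1]
                den += 1.0
            if i < n - 1:
                # Slope term with the right neighbour.
                num += z[i + 1] - slope[i]
                den += 1.0
            z[i] = num / den
    return z

def rms_error(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

# Synthetic surface profile (assumption: a smooth sine-shaped depth in meters).
truth = [1.0 + 0.3 * math.sin(0.4 * i) for i in range(20)]
# Coarse range sensor modelled as quantization to 0.25 m steps.
coarse = [round(v * 4) / 4 for v in truth]
# Idealized slope measurement: exact local differences of the true surface.
slope = [truth[i + 1] - truth[i] for i in range(len(truth) - 1)]

fused = fuse_depth_with_slope(coarse, slope)
print("RMS error, coarse depth only:", rms_error(coarse, truth))
print("RMS error, fused with slope: ", rms_error(fused, truth))
```

In this toy setup the fused profile tracks the true surface more closely than the quantized depth alone: the slope term restores the fine detail, while the coarse depth pins down the absolute range that slopes alone cannot provide.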

Thanks to AG for the link!

Yole Report on Autonomous Vehicles

Yole Developpement research "Sensors and Data Management for Autonomous Vehicles report 2015" shows the roadmap from manned driving to "feet off" to "hands off" to "mind off" and the sensors needed for that:

"Currently, the most advanced commercial car with autonomous features embeds in the order of 17 sensors. Yole anticipates 29+ sensors by 2030. Today, two sensor businesses dominate: ultrasonic sensors and cameras for surround. Together they are worth a $2.4B market value, but ultrasonic sensors comprise 85% of current market volume. By 2030, cameras for surround will lead the market at $12B, followed by ultrasound sensors and long-range radar ($8.7B and $7.9B respectively), and then short-range radar ($5B). Overall 2030 market value is estimated at $36B.

All of these evolutions will likely lead to mass-market adoption of semi-autonomous vehicles by 2030. We expect more than 22M units (18% of all vehicles sold) of level-2 vehicles and 10M units of level-3 vehicles by 2030, along with 38M units of level-2 vehicles and 1M units of Level-4 vehicles."

High Speed Bullet Photography

Odos Imaging CEO Chris Yates publishes an article in Vision Systems Design showing its high speed camera capabilities in shooting a bullet:

Camera measures the bullet deceleration due to the air drag

AR Startup Magic Leap Raises $827M on Valuation of $3.7B

WSJ, Forbes, The Verge: Augmented-reality startup Magic Leap could raise up to $827 million in a new funding round. This would bring Magic Leap’s total funding to about $1.4 billion. If Magic Leap raises the full amount, it could be valued at about $3.7 billion, according to venture-capital data firm VC Experts. The previous investments came from Google, Qualcomm Ventures, KKR, Vulcan Capital, KPCB, Andreessen Horowitz, Obvious Ventures and others.

“We’re fundamentally a new kind of lightfield chip to enable new experiences,” said Magic Leap founder and CEO Rony Abovitz at a conference in June. “There’s no off-the-shelf stuff used. That’s the reason for the amount of capital we’ve raised: to go to the moon.” Abovitz also released Magic Leap’s SDK and said the company was building a 260,000 sq. ft. manufacturing facility in Florida to develop components for the device.

Pixpolar Receives EC Seal of Excellence Award

Pixpolar was awarded the European Commission’s Seal of Excellence. The certificate is delivered by the European Commission, as the institution managing Horizon 2020, the EU Framework Program for Research and Innovation 2014-2020. Following evaluation by an international panel of independent experts, Pixpolar Oy's innovative project proposal was successful.

A year ago, Pixpolar Oy received funding in the first round of the EU's Horizon 2020 Small and Medium-sized Enterprises (SME) Instrument for Phase 1 projects. In this round, the instrument received a total of 2602 eligible project proposals, of which 6% received funding. The toughest competition was within the Open Disruptive Innovation (Information and Communication Technologies) topic, where 3% of 886 eligible proposals received funding. Pixpolar Oy was the only successful applicant from Finland under the Open Disruptive Innovation topic.

Also a year ago, Pixpolar Oy received support from TEKES (the Finnish Funding Agency for Technology and Innovation) for a demonstrator project.

Softkinetic Receives Vrije University Award

Vrije Universiteit Brussel awards its first Certificate of Appreciation to Optrima/SoftKinetic, a 3D Sensor company and a very successful VUB spin-off. The award is a token of recognition of the contribution, quality work, and dedication in promoting and valorising VUB's technological excellence around the globe. In October 2015, Sony announced the acquisition of SoftKinetic Systems.

SoftKinetic originated from a merger of VUB spin-off Optrima and SoftKinetic in 2011. Prof. Maarten Kuijk (ETRO), who founded Optrima with four of his PhD students, stood at the origin of SoftKinetic's groundbreaking 3D technology.

ON Semi Presents Automotive Imaging Applications

ON Semi publishes a 15-minute video on CMOS image sensor applications in automotive imaging. Unfortunately, only a Japanese version is available:

Seeing Around Corners

The Extreme Lighting Group of Heriot-Watt University explains how one can see around corners with the help of very fast cameras:

2016 Image Sensors Europe Agenda Announced

The 2016 Image Sensors Conference, to be held on March 16-17 in London, UK, announces its agenda:

Photon Counting Without Avalanche Multiplication - Progress on the Quanta Image Sensor

Eric Fossum, Professor of Thayer School of Engineering at Dartmouth

Opportunities and Differentiation on Image Sensor Market

Vladimir Koifman, Chief Technology Officer of Analog Value

Present and Future Trends in Silicon Imagers for Non-Visible Imaging and Instrumentation

Bart Dierickx, Founder and CEO of Caeleste

Challenges for Scene Understanding in Automotive Environment

Anna Gaszczak, Research Lead Engineer of Jaguar Land Rover

The On-Chip-Optics for Future Optical Sensor

JC Hsieh, Associate Vice President of R&D of VisEra

Security Imaging

Anders Johannesson, Senior Expert - Imaging Research and Development, Axis Communications

Bayer Pattern and Image Quality

Jörg Kunze, Team Leader PreDevelopment of Basler

QuantumFilm: A New Way To Capture Light

Emanuele Mandelli, Vice President of Engineering of InVisage

CIS for High-End Niche Applications

Guy Meynants, CTO of CMOSIS

High Speed Image Sensors

Wilfried Uhring, Professor of ICube Laboratory - University of Strasbourg

Overview of the State of the Art Event Driven Sensors

Tobi Delbruck, Professor of Institute of Neuroinformatics

Minimal form factor camera modules for medical endoscopic and speciality imaging applications

Martin Waeny, CEO of AWAIBA

Challenges for Future Medical Endoscopic Imaging

Nana Akahane, Senior Supervisor - Medical Imaging Technology Department of Olympus Corporation

The Development of High Resolution Scintillators for X-ray Flat Panel Sensors

Simon Whitbread, Technology Specialist of Hamamatsu Photonics

Organic and Hybrid Photodetectors for Medical X-Ray Imaging

Sandro Tedde, Senior Key Expert Research Scientist of Siemens Healthcare

Broadcaster's Future Requirements for Ultra-High Definition TV

Richard Salmon, Lead Research Engineer of BBC Research & Development

Broadcasting and Pixilation

Peter Centen, Director R&D Cameras of Grass Valley

TBC

Simon Ji - LG Electronics

Bio-inspired Method Addresses Speed, DR and Power Efficiency Limitations of Image Sensors

Christoph Posch, Chief Technical Officer of Chronocam

New Business Models and Offers in Imaging Doesn't Prevent High Level of Innovation

Philippe Rommeveaux, President and CEO, Pyxalis

Panasonic Resumes Image Sensor R&D

Nikkei reports that Panasonic is resuming image sensor development after freezing it for the last few years, aiming at applications including 8K UHDTV. The company plans to spend about 10 billion yen ($80.8M) to develop next-generation 8K sensors, with plans to release them in fiscal 2018.

Initially, Panasonic will use the new image sensors in its own consumer and broadcasting cameras. Later on, it intends to target broader applications including self-driving cars and surveillance, and may sell the sensors to other companies for smartphone and other applications. Production will likely be outsourced.

Panasonic had frozen image sensor development since its disastrous fiscal 2011. As earnings sharply picked up, Panasonic apparently decided that developing its own key image-processing components is essential to gaining a competitive edge in imaging products.

TSR estimates the global image sensor market coming to 1.2 trillion yen in 2015. Sensors that support UHD imaging are seen as a growth area.

CMOSIS Acquisition Completed

ams announces the completion of the transaction to acquire CMOSIS.

Sony and Toshiba Sign Definitive Agreement on Image Sensor Business Transfer

Based on the MOU of October 28, 2015, Sony and Toshiba have signed definitive agreements to transfer to Sony and to Sony Semiconductor Corporation ("SCK"), a wholly-owned subsidiary of Sony, Toshiba's fab facilities, equipment and related assets in its Oita Operations facility, as well as other related equipment and assets owned by Toshiba.

Toshiba will transfer facilities, equipment and related assets of Toshiba's 300mm wafer production line, mainly located at its Oita Operations facility. The purchase price of the Transfer is 19 billion yen. Sony and Toshiba aim to complete the Transfer within the fiscal year ending March 31, 2016. Following the Transfer, Sony and SCK plan to operate the semiconductor fabrication facilities as fabrication facilities of SCK, primarily for manufacturing CMOS image sensors.

Sony is to offer the employees of Toshiba and its affiliates employed at the fabrication facilities, as well as certain employees involved in areas such as CMOS sensor engineering and design (approximately 1,100 employees in total), employment within the Sony Group.

At the time of construction of Oita fab in 2004, Toshiba said that it is to "be the first semiconductor facility in the world to deploy 65-nanometer process and design technologies. ...A 200 billion yen investment program from FY2003 to FY2007, will bring production capacity to 12,500 wafers a month. This could be further expanded if necessary, up to a capacity of 17,500 wafers a month, with further investment."

Omnivision Reports Quarterly Earnings

PRNewswire: OmniVision reports the results for its fiscal quarter that ended on October 31, 2015. Revenues were $343.1M, as compared to $329.9M in the previous quarter, and $394.0M a year ago. GAAP net income was $13.9M, as compared to $18.2M in the previous quarter, and $28.0M a year ago.

GAAP gross margin was 21.9%, as compared to 22.6% for the previous quarter and 22.0% a year ago. The decrease in gross margin was attributable to broad-based price erosions, particularly in the mobile phone market.

The Company ended the period with cash, cash equivalents and short-term investments totaling $613.5M, an increase of $19.7M from the previous quarter.

"We are very pleased with our second quarter results. We have exceeded our own expectations, despite demand uncertainties in our various target markets," said Shaw Hong, CEO of OmniVision. "Regardless of these short-term uncertainties, we will continue to invest in the future of our business, and ultimately, return to our long-term growth trajectory."

Based on current trends, the Company expects revenues for the next quarter in the range of $310M to $340M.

Toshiba Image Recognition Processors

Toshiba publishes a couple of YouTube videos on applications of its TMPV75 and TMPV76 Series Image Recognition Processors in ADAS and other areas:

IS&T 2016 Electronic Imaging Symposium

The 2016 Electronic Imaging Symposium is organized by IS&T without SPIE. The Symposium is to be held in San Francisco, CA, on February 14-18, 2016. Its preliminary agenda has been published and has a broad collection of image sensor presentations. Below is a list of those in the Image Sensors and Imaging Systems path:

A high dynamic range linear vision sensor with event asynchronous and frame-based synchronous operation

Juan A. Leñero-Bardallo, Ricardo Carmona-Galán, and Angel Rodríguez-Vázquez, Universidad de Sevilla (Spain)

A dual-core highly programmable 120dB image sensor

Benoit Dupont, Pyxalis (France)

High dynamic range challenges

Short presentation by Arnaud Darmont

Image sensor with organic photoconductive films by stacking the red/green and blue components

Tomomi Takagi, Toshikatu Sakai, Kazunori Miyakawa, and Mamoru Furuta; NHK Science & Technology Research Laboratories and Kochi University of Technology (Japan)

High-sensitivity CMOS image sensor overlaid with Ga2O3/CIGS heterojunction photodiode

Kazunori Miyakawa, Shigeyuki Imura, Misao Kubota, Kenji Kikuchi, Tokio Nakada, Toru Okino, Yutaka Hirose, Yoshihisa Kato, and Nobukazu Teranishi; NHK Science and Technology Research Laboratories, NHK Sapporo Station, Tokyo University of Science, Panasonic Corporation, University of Hyogo, and Shizuoka University (Japan)

Sub-micron pixel CMOS image sensor with new color filter patterns

Biay-Cheng Hseih, Sergio Goma, Hasib Siddiqui, Kalin Atanassov, Jiafu Luo, RJ Lin, Hy Cheng, Kuoyu Chou, JJ Sze, and Calvin Chao; Qualcomm Technologies Inc. (United States) and TSMC (Taiwan)

A CMOS image sensor with variable frame rate for low-power operation

Byoung-Soo Choi, Sung-Hyun Jo, Myunghan Bae, Sang-Hwan Kim, and Jang-Kyoo Shin, Kyungpook National University (South Korea)

ADC techniques for optimized conversion time in CMOS image sensors

Cedric Pastorelli and Pascal Mellot; ANRT and STMicroelectronics (France)

Miniature lensless computational infrared imager

Evan Erickson, Mark Kellam, Patrick Gill, James Tringali, and David Stork, Rambus (United States)

Focal-plane scale space generation with a 6T pixel architecture

Fernanda Oliveira, José Gabriel Gomes, Ricardo Carmona-Galán, Jorge Fernández-Berni, and Angel Rodríguez-Vázquez; Universidade Federal do Rio de Janeiro (Brazil) and Instituto de Microelectrónica de Sevilla (Spain)

Development of an 8K full-resolution single-chip image acquisition system

Tomohiro Nakamura, Ryohei Funatsu, Takahiro Yamasaki, Kazuya Kitamura, and Hiroshi Shimamoto, Japan Broadcasting Corporation (NHK) (Japan)

Characterization of VNIR hyperspectral sensors with monolithically integrated optical filters

Prashant Agrawal, Klaas Tack, Bert Geelen, Bart Masschelein, Pablo Mateo Aranda Moran, Andy Lambrechts, and Murali Jayapala; Imec and TMC (Belgium)

A 1.12-um pixel CMOS image sensor survey

Clemenz Portmann, Lele Wang, Guofeng Liu, Ousmane Diop, and Boyd Fowler, Google Inc (United States)

A comparative noise analysis and measurement for n-type and p-type pixels with CMS technique

Xiaoliang Ge, Bastien Mamdy, and Albert Theuwissen; Technische Univ. Delft (Netherlands), STMicroelectronics, Universite Claude Bernard Lyon 1 (France), and Harvest Imaging (Belgium)

Increases in hot pixel development rates for small digital pixel sizes

Glenn Chapman, Rahul Thomas, Rohan Thomas, Klinsmann Meneses, Tony Yang, Israel Koren, and Zahava Koren; Simon Fraser Univ. (Canada) and Univ. of Massachusetts Amherst (United States)

EMVA1288 3.1rc2 and research on version 3.2 and next

Arnaud Darmont and Adrien Lombet, APHESA SPRL (Belgium)

A time-of-flight CMOS range image sensor using 4-tap output pixels with lateral-electric-field control

Taichi Kasugai, Sang-Man Han, Hanh Trang, Taishi Takasawa, Satoshi Aoyama, Keita Yasutomi, Keiichiro Kagawa, and Shoji Kawahito; Shizuoka Univ. and Brookman Technology (Japan)

Design, implementation and evaluation of a TOF range image sensor using multi-tap lock-in pixels with cascaded charge draining and modulating gates

Trang Nguyen, Taichi Kasugai, Keigo Isobe, Sang-Man Han, Taishi Takasawa, De Xing Lioe, Keita Yasutomi, Keiichiro Kagawa, and Shoji Kawahito; Shizuoka Univ. and Brookman Technology (Japan)

Sharp Imager Sales Growth Slows

Sharp presented its quarterly results about a month ago. It appears that the company's imager sales peaked about half a year ago and are now slowly declining:

Photoneo Wins Slovakia StartUpAwards

Photoneo reports that it is the winner of StartupAwards.sk for 2015 in the Science category.

In separate news, the company's CEO Jan Zizka was selected for the group of 100 leaders of change in the NEWEUROPE100 initiative. This list showcases people that will be the drivers of change in Central and Eastern Europe in the near future.

CMOSIS Pre-Announces Fast & Large 2MP Sensor

Next week, CMOSIS will introduce the CSI2100 2MP CMOS sensor. Its features include a 1440 x 1440 pixel array with 12 µm B&W GS pixels and a 500 fps frame rate in 10-bit mode. The sensor boasts a full well capacity of 2 Me-/pixel and is optimized to detect small signal variations in bright images captured at high speed. This makes it suitable for medical, scientific and industrial applications. The CSI2100 evaluation kit was co-developed with Pleora Technologies.
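A quick back-of-the-envelope calculation shows what these figures imply. The sketch below uses only the numbers quoted above and makes two simplifying assumptions that are not from the announcement: the data rate ignores any blanking or protocol overhead, and the signal-to-noise estimate considers photon shot noise alone for a pixel filled to its full well.

```python
import math

# Figures quoted in the announcement
width, height = 1440, 1440
frame_rate_fps = 500
bits_per_pixel = 10
full_well_electrons = 2_000_000

# Raw output data rate (assumption: no blanking or protocol overhead)
pixels_per_frame = width * height
raw_bits_per_second = pixels_per_frame * frame_rate_fps * bits_per_pixel
raw_gbit_per_second = raw_bits_per_second / 1e9

# Shot-noise-limited SNR at full well (assumption: Poisson noise only)
shot_noise_electrons = math.sqrt(full_well_electrons)
peak_snr = full_well_electrons / shot_noise_electrons
peak_snr_db = 20 * math.log10(peak_snr)
smallest_relative_change = 1 / peak_snr  # per pixel, per frame, at 1 sigma

print(f"pixels per frame: {pixels_per_frame}")
print(f"raw data rate: {raw_gbit_per_second:.3f} Gbit/s")
print(f"peak shot-noise-limited SNR: {peak_snr:.0f}:1 ({peak_snr_db:.1f} dB)")
print(f"1-sigma relative signal change: {smallest_relative_change:.2%}")
```

Under these assumptions the array delivers about 2.07 MP per frame at roughly 10.4 Gbit/s, and the large full well gives a shot-noise-limited SNR of about 63 dB, which is why such a pixel suits small variations on a bright background.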

UMC CIS MPW

Europractice publishes its 2016 MPW prices and schedules. It looks like, other than TowerJazz, UMC is becoming one of the biggest CIS MPW providers, with offerings that include a 110nm CIS process:

5 - block size 5mm x 5mm, 6 - block size 4mm x 4mm. Discounted prices are available only to Europractice members and only for educational or publicly funded research use.

Yole Report on IR Image Sensors

Yole Developpement "Infrared Detector Technology & Market Trends (2015 edition)" report estimates the total IR detector market at 247M units in 2014 and a global revenue of US$ 209M. Yole’s analysts highlight the rapid growth of +14% between 2015 and 2020 (in units). Five of the nine applications will drive the IR detector market revenue growth: spot thermometry in mobile devices, motion detection, smart building, HVAC and other medium array applications, and people counting. “The next growth opportunities will be outside traditional markets, in smart buildings and mobile devices,” comments Yann de Charentenay, Senior Technology & Market Analyst at Yole.

Interview with Martin Wäny

Martin Wäny, the founder and CEO of Awaiba, now CMOSIS, gives an interview on endoscopic imaging to the News Medical site. A few interesting quotes:

"The miniaturization, and in particular the usage of semiconductor miniaturization technologies, allows us to build smaller, in volume and lower priced endoscopic cameras. This enables the proliferation of disposable endoscopes for a wide range of applications.

Is it possible to introduce 3D visualization using the mini optical modules?

Yes, for that purpose CMOSIS already offers endoscopic stereo camera modules. Multiple camera modules can provide 3D image information as needed for dental applications, or for the absolute measurement of features in laparoscopy or gastroenterology."

CMOSIS-Awaiba Naneye Camera

XIMEA on IMEC Multispectral Camera

German camera maker XIMEA publishes a Vimeo talk about its hyperspectral cameras based on IMEC sensors:

Noiseless Frame Summation in CMOS Sensors

As promised in comments to the earlier post on sub-electron pixel noise, here is the presentation on the noiseless frame summation in the regular charge-transfer pixel that can allow DR expansion while maintaining the usual 4T pixel noise level:

The above slides complete the description of the general idea of noiseless frame summation. There are a few practical issues that need to be addressed though:
