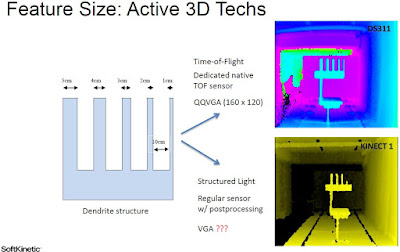

AutoSens 2016 kindly permitted me to post a couple of slides from the SoftKinetic presentation "3D depth-sensing for automotive: bringing awareness to the next generation of (autonomous) vehicles" by Daniel Van Nieuwenhove. A good part of the presentation compares ToF with active and passive stereo solutions:

Samsung System LSI Might Split into Fabless and Foundry Businesses

According to BusinessKorea sources, Samsung is contemplating splitting its semiconductor business into fabless and foundry divisions:

"Samsung Electronics’ System LSI business division is largely divided into four segments; system on chip (SoC) team which develops mobile APs, LSI development team, which designs display driver chips and camera sensors, foundry business team and support team. According to many officials in the industry, Samsung Electronics is now considering forming the fabless division by uniting the SoC and LSI development teams and separating from the foundry business."

Nikon Applies for 2-Layer Stacked PDAF Patent

NikonRumors quotes Egami on a Nikon patent application for a 2-layer stacked pixel array that forms a cross-type PDAF: "Nikon patent application is to use the two imaging elements having different phase difference detection direction in order to achieve a cross-type AF."
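Phase-detection AF generally works by comparing two signals that see the scene through opposite halves of the lens pupil and finding the shift between them; a cross-type arrangement does this along two orthogonal directions. The sketch below is a generic illustration under that assumption, not Nikon's method: it estimates the shift with a sum-of-absolute-differences search, once for a horizontal pair (as if from one layer) and once for a vertical pair (as if from the other). The function names and the `max_shift` search window are hypothetical.

```python
import numpy as np


def phase_shift_1d(a, b, max_shift):
    """Integer shift s minimizing mean |a[i] - b[i + s]| over overlapping samples."""
    n = len(a)
    best_s, best_cost = 0, np.inf
    for s in range(-max_shift, max_shift + 1):
        lo, hi = max(0, -s), min(n, n - s)  # keep both i and i + s inside [0, n)
        cost = np.mean(np.abs(a[lo:hi] - b[lo + s:hi + s]))
        if cost < best_cost:
            best_s, best_cost = s, cost
    return best_s


def cross_type_pdaf(left, right, top, bottom, max_shift=8):
    """Hypothetical cross-type PDAF: one pixel pair per direction (e.g. per layer).

    Returns (horizontal_shift, vertical_shift); the sign and magnitude of each
    shift indicate the direction and amount of defocus along that axis.
    """
    return (phase_shift_1d(left, right, max_shift),
            phase_shift_1d(top, bottom, max_shift))
```

The point of the second direction: a scene with only vertical edges gives a clear horizontal shift but a nearly flat vertical profile, and vice versa, so measuring both axes makes AF robust to edge orientation.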

Thanks to TG for the link!

Sony, ON Semi Image Sensor Innovations

Framos publishes interviews with Framos North America VP Sebastian Dignard and ON Semi Go-To-Market Manager Michael DeLuca on image sensor innovations:

Edoardo Charbon and Junichi Nakamura Elevated to IEEE Fellows

Edoardo Charbon and Junichi Nakamura have been elevated to IEEE Fellows. Thanks to AT for the info!

More on Hitachi Lensless Camera

Nikkei publishes an article with more details on the Hitachi lensless camera:

"In general, the method that Hitachi employed for its lens-less camera uses a "moire stripe" that can be obtained by stacking two concentric-patterned films with a certain interval and transmitting light through them. The numerous light-emitting points constituting the image influence the pitch and orientation of the moire stripe. The location of light, etc can be restored by applying two-dimensional Fourier transformation to the moire stripe.

This time, Hitachi replaced one of the films (one that is closer to the image sensor) with image processing. In other words, one film is placed with an interval of about 1mm, but the other film does not actually exist. And, instead of using the second film, a concentric pattern is superimposed on image data."

Image Sensors at ISSCC 2017

ISSCC publishes its 2017 advance program. Sony is going to present its 3-die stacked sensor, a somewhat expected evolution of the stacking technology:

A 1/2.3in 20Mpixel 3-Layer Stacked CMOS Image Sensor with DRAM

T. Haruta, T. Nakajima, J. Hashizume, T. Umebayashi, H. Takahashi, K. Taniguchi, M. Kuroda, H. Sumihiro, K. Enoki, T. Yamasaki, K. Ikezawa, A. Kitahara, M. Zen, M. Oyama, H. Koga, H. Tsugawa, T. Ogita, T. Nagano, S. Takano, T. Nomoto

Sony Semiconductor Solutions, Atsugi, Japan

Sony Semiconductor Manufacturing, Atsugi, Japan

Sony LSI Design, Atsugi, Japan

Canon presents, apparently, a version of its IEDM global shutter sensor paper with more emphasis on the readout architecture:

A 1.8e- rms Temporal Noise Over 110dB Dynamic Range 3.4μm Pixel Pitch Global Shutter CMOS Image Sensor with Dual-Gain Amplifiers, SS-ADC and Multiple-Accumulation Shutter

M. Kobayashi, Y. Onuki, K. Kawabata, H. Sekine, T. Tsuboi, Y. Matsuno, H. Takahashi, T. Koizumi, K. Sakurai, H. Yuzurihara, S. Inoue, T. Ichikawa

Canon, Kanagawa, Japan

Other nice papers in the Imager session are listed below:

A 640×480 Dynamic Vision Sensor with a 9μm Pixel and 300Meps Address-Event Representation

B. Son, Y. Suh, S. Kim, H. Jung, J-S. Kim, C. Shin, K. Park, K. Lee, J. Park, J. Woo, Y. Roh, H. Lee, Y. Wang, I. Ovsiannikov, H. Ryu

Samsung Advanced Institute of Technology, Suwon, Korea;

Samsung Electronics, Pasadena, CA

A Fully Integrated CMOS Fluorescence Biochip for Multiplex Polymerase Chain-Reaction (PCR) Processes

A. Hassibi, R. Singh, A. Manickam, R. Sinha, B. Kuimelis, S. Bolouki, P. Naraghi-Arani, K. Johnson, M. McDermott, N. Wood, P. Savalia, N. Gamini

InSilixa, Sunnyvale, CA

A Programmable Sub-Nanosecond Time-Gated 4-Tap Lock-In Pixel CMOS Image Sensor for Real-Time Fluorescence Lifetime Imaging Microscopy

M-W. Seo, Y. Shirakawa, Y. Masuda, Y. Kawata, K. Kagawa, K. Yasutomi, S. Kawahito

Shizuoka University, Hamamatsu, Japan

A Sub-nW 80mlx-to-1.26Mlx Self-Referencing Light-to-Digital Converter with AlGaAs Photodiode

W. Lim, D. Sylvester, D. Blaauw

University of Michigan, Ann Arbor, MI

A 2.1Mpixel Organic-Film Stacked RGB-IR Image Sensor with Electrically Controllable IR Sensitivity

S. Machida, S. Shishido, T. Tokuhara, M. Yanagida, T. Yamada, M. Izuchi, Y. Sato, Y. Miyake, M. Nakata, M. Murakami, M. Harada, Y. Inoue

Panasonic, Osaka, Japan

A 0.44e- rms Read-Noise 32fps 0.5Mpixel High-Sensitivity RG-Less-Pixel CMOS Image Sensor Using Bootstrapping Reset

M-W. Seo, T. Wang, S-W. Jun, T. Akahori, S. Kawahito

Shizuoka University, Hamamatsu, Japan;

Brookman Technology, Hamamatsu, Japan

A 1ms High-Speed Vision Chip with 3D-Stacked 140GOPS Column-Parallel PEs for Spatio-Temporal Image Processing

T. Yamazaki, H. Katayama, S. Uehara, A. Nose, M. Kobayashi, S. Shida, M. Odahara, K. Takamiya, Y. Hisamatsu, S. Matsumoto, L. Miyashita, Y. Watanabe, T. Izawa, Y. Muramatsu, M. Ishikawa;

Sony Semiconductor Solutions, Atsugi, Japan;

Sony LSI Design, Atsugi, Japan

University of Tokyo, Bunkyo, Japan

The tutorials day includes one on deep learning processors:

Energy-Efficient Processors for Deep Learning

Marian Verhelst

KU Leuven, Heverlee, Belgium

Basler's First ToF Camera Enters Series Production

Basler: After a successful conclusion of the evaluation phase and extremely positive customer feedback, Basler's first ToF camera is now entering series production. The VGA ToF camera is said to stand out for its combination of high resolution and powerful features at a very attractive price. This outstanding price/performance ratio puts the Basler ToF camera in a unique position on the market and distinguishes it significantly from competitors' cameras.

The Basler ToF camera operates on the pulsed time-of-flight principle. It is outfitted with eight high-power LEDs working in the NIR range, and generates 2D and 3D data in one shot with a multipart image comprising range, intensity and confidence maps. It delivers distance values in a working range from 0 to 13.3 meters at 20fps. The measurement accuracy of the Basler ToF camera is +/-1 cm at a range from 0.5 to 5.8 meters, while consuming 15W of power.
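For a pulsed ToF camera, distance follows from the round-trip time of the light: d = c·t/2. The minimal Python sketch below (helper names are ours) shows what the quoted 13.3 m range and +/-1 cm accuracy mean in timing terms. It also includes a common two-gate indirect pulsed scheme, in which the ratio of charge collected in two consecutive gates encodes the delay; this is an assumption for illustration only, since Basler's announcement does not describe how its sensor measures the delay.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def distance_from_round_trip(t_round_trip_s: float) -> float:
    """Distance to target given the measured round-trip time of the light pulse."""
    return C * t_round_trip_s / 2.0


def round_trip_for_distance(d_m: float) -> float:
    """Round-trip time the pulse needs to reach a target at d_m and return."""
    return 2.0 * d_m / C


def two_gate_distance(q1: float, q2: float, pulse_width_s: float) -> float:
    """Hypothetical two-gate indirect pulsed ToF (not Basler's documented design).

    Gate 1 is open during the emitted pulse and gate 2 right after it, so the
    share of returned charge landing in gate 2 grows linearly with the delay.
    Valid for delays up to one pulse width (max range c * pulse_width / 2).
    """
    return (C * pulse_width_s / 2.0) * q2 / (q1 + q2)


if __name__ == "__main__":
    print(f"{round_trip_for_distance(13.3) * 1e9:.1f} ns")  # 88.7 ns
    # +/-1 cm of distance is +/-2 cm of optical path, i.e. about 66.7 ps:
    print(f"{round_trip_for_distance(0.01) * 1e12:.1f} ps")  # 66.7 ps
```

The timing numbers show why ToF accuracy is hard: centimeter-level distance accuracy requires resolving tens of picoseconds, which indirect schemes achieve by averaging charge over many pulses rather than timing a single one.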

Movidius on IoT Vision Applications

ARMDevices interviews Movidius VP Marketing Gary Brown on the low-power advantages of the company's vision processor in various applications:

Jaroslav Hynecek Gets 2016 EDS J.J. Ebers Award

IEEE Electron Devices Society publishes a list of this year's awards. Jaroslav Hynecek receives the 2016 EDS J.J. Ebers Award with the citation "For the pioneering work and advancement of CCD and CMOS image sensor technologies." The award is to be presented at IEDM in December.

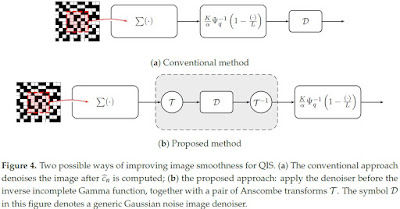

Image Reconstruction for Quanta Image Sensors

Open-access Sensors journal publishes Purdue University paper "Images from Bits: Non-Iterative Image Reconstruction for Quanta Image Sensors" by Stanley H. Chan, Omar A. Elgendy, and Xiran Wang. From the abstract:

"Because of the stochastic nature of the photon arrivals, data acquired by QIS is a massive stream of random binary bits. The goal of image reconstruction is to recover the underlying image from these bits. In this paper, we present a non-iterative image reconstruction algorithm for QIS. Unlike existing reconstruction methods that formulate the problem from an optimization perspective, the new algorithm directly recovers the images through a pair of nonlinear transformations and an off-the-shelf image denoising algorithm. By skipping the usual optimization procedure, we achieve orders of magnitude improvement in speed and even better image reconstruction quality. We validate the new algorithm on synthetic datasets, as well as real videos collected by one-bit single-photon avalanche diode (SPAD) cameras."
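The abstract describes the pipeline only in outline: sum the bits, then apply a pair of nonlinear transforms around an off-the-shelf denoiser. A minimal NumPy sketch of that idea follows, under our own assumptions: a one-bit jot that fires when it sees at least one photon (so P(1) = 1 - exp(-theta)), the binomial Anscombe transform as the variance-stabilizing transform, and a 3x3 box filter standing in for a real denoiser such as BM3D. It is not the authors' code, and the exact transforms in the paper may differ.

```python
import numpy as np


def simulate_qis_bits(theta, num_frames, rng):
    """One-bit QIS model (assumed): a jot outputs 1 if it receives >= 1 photon.

    theta is the mean photon count per jot per frame, so P(bit = 1) = 1 - exp(-theta).
    """
    photons = rng.poisson(theta, size=(num_frames,) + theta.shape)
    return (photons >= 1).astype(np.uint8)


def binomial_anscombe(s, k):
    """Variance-stabilizing transform for s ~ Binomial(k, p)."""
    return np.arcsin(np.sqrt((s + 3.0 / 8.0) / (k + 3.0 / 4.0)))


def inverse_binomial_anscombe(y, k):
    """Simple algebraic inverse of binomial_anscombe, clipped to [0, k]."""
    return np.clip(np.sin(y) ** 2 * (k + 3.0 / 4.0) - 3.0 / 8.0, 0.0, k)


def box_denoise(img, radius=1):
    """Stand-in for an off-the-shelf denoiser: a (2r+1) x (2r+1) mean filter."""
    padded = np.pad(img, radius, mode="edge")
    size = 2 * radius + 1
    out = np.zeros(img.shape, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / size ** 2


def reconstruct(bits):
    """Non-iterative reconstruction: sum, transform, denoise, invert."""
    k = bits.shape[0]
    s = bits.sum(axis=0).astype(float)            # binomial counts per jot
    y = box_denoise(binomial_anscombe(s, k))      # denoise in the stabilized domain
    p_hat = inverse_binomial_anscombe(y, k) / k   # estimated P(bit = 1)
    p_hat = np.clip(p_hat, 0.0, 1.0 - 1e-6)       # keep the log finite
    return -np.log1p(-p_hat)                      # invert p = 1 - exp(-theta)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    theta = np.tile(np.linspace(0.1, 1.5, 32), (32, 1))  # synthetic gradient scene
    estimate = reconstruct(simulate_qis_bits(theta, 200, rng))
    print(np.abs(estimate - theta).mean())
```

Nothing here iterates: each step is a closed-form per-pixel transform or a single filter pass, which is where the speed advantage over optimization-based reconstruction comes from.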

Thanks to EF for the link!

Xilinx Demos AlexNet on FPGA

Xilinx publishes a demo of neural network inference on its FPGA, using AlexNet. AlexNet, although somewhat dated by modern standards, seems to remain the most popular network for such capability demonstrations:

Tesla New Self-Driving Hardware Demo

Tesla publishes a nice demo of its new self-driving platform, together with computer-interpreted images from its 3 cameras:

A longer version of this video is here.

A longer version of this video is here.

High EQE Broadband Photodiode

Opli quotes Nature Photonics paper "Near-unity quantum efficiency of broadband black silicon photodiodes with an induced junction" by Mikko A. Juntunen, Juha Heinonen, Ville Vähänissi, Päivikki Repo, Dileep Valluru & Hele Savin from Aalto University, Espoo, Finland. From the abstract:

"Present-day photodiodes notably suffer from optical losses and generated charge carriers are often lost via recombination. Here, we demonstrate a device with an external quantum efficiency above 96% over the wavelength range 250–950 nm. Instead of a conventional p–n junction, we use negatively charged alumina to form an inversion layer that generates a collecting junction extending to a depth of 30 µm in n-type silicon with bulk resistivity larger than 10 kΩ cm. We enhance the collection efficiency further by nanostructuring the photodiode surface, which results in higher effective charge density and increased charge-carrier concentration in the inversion layer. Additionally, nanostructuring and efficient surface passivation allow for a reliable device response with incident angles up to 70°."
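External quantum efficiency is the ratio of collected electrons to incident photons, EQE = (I/q) / (P·λ/(h·c)), and it maps directly to responsivity in A/W. The short sketch below (function names are ours) converts the quoted >96% EQE into responsivity at the two ends of the 250 to 950 nm band; it simply assumes EQE = 0.96 at both wavelengths.

```python
H = 6.62607015e-34   # Planck constant, J*s
C = 299_792_458.0    # speed of light, m/s
Q = 1.602176634e-19  # elementary charge, C


def eqe(photocurrent_a: float, optical_power_w: float, wavelength_m: float) -> float:
    """Collected electrons per incident photon."""
    electrons_per_s = photocurrent_a / Q
    photons_per_s = optical_power_w * wavelength_m / (H * C)
    return electrons_per_s / photons_per_s


def responsivity(eqe_value: float, wavelength_m: float) -> float:
    """Photocurrent per unit optical power (A/W) for a given EQE."""
    return eqe_value * Q * wavelength_m / (H * C)


if __name__ == "__main__":
    for wl_nm in (250, 950):
        print(wl_nm, f"{responsivity(0.96, wl_nm * 1e-9):.3f} A/W")
    # 250 0.194 A/W
    # 950 0.736 A/W
```

The same EQE gives very different A/W figures across the band because short-wavelength photons carry more energy each, so a watt of UV light contains fewer photons than a watt of NIR.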

Thanks to TG for the link!

AutoSens: Demystifying ADAS Camera Parameters

AutoSens conference kindly permitted me to post a few slides from the TI presentation "ADAS Front Camera (FC): Demystifying System Parameters" by Mihir Mody.

Data from Car Stopping Distance Calculator
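Stopping distance is a natural way to size a front camera: the system has to detect an obstacle at least that far ahead, with enough pixels on it to classify it. The sketch below uses the usual calculator model (reaction distance plus braking distance v²/2a) and a pinhole-camera estimate of pixels on target. The 1.5 s reaction time, 7 m/s² deceleration, 1920-pixel width, 50° horizontal FOV and 0.5 m pedestrian width are all assumptions for illustration, not figures from the TI presentation.

```python
import math


def stopping_distance_m(speed_kmh: float, reaction_s: float = 1.5,
                        decel_mps2: float = 7.0) -> float:
    """Reaction distance plus braking distance v^2 / (2a), in meters."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_s + v * v / (2.0 * decel_mps2)


def pixels_on_target(width_m: float, distance_m: float,
                     h_res_px: int, hfov_deg: float) -> float:
    """Horizontal pixels spanned by an object, pinhole (rectilinear) camera model."""
    focal_px = (h_res_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    return width_m * focal_px / distance_m


if __name__ == "__main__":
    d = stopping_distance_m(100.0)
    print(f"{d:.1f} m")                                      # 96.8 m
    print(f"{pixels_on_target(0.5, d, 1920, 50.0):.1f} px")  # 10.6 px
```

Because braking distance grows with v² while pixels on target fall as 1/distance, a modest speed increase quickly pushes a wide-FOV camera below the pixel count a detector needs, which is one reason front cameras trade FOV against resolution.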

Intel RealSense 400 Presentation

ARMDevices interviews an Intel representative who demonstrates the RealSense 3D camera and talks about 3D vision technology applications:

AR Glasses to Grow into $1b Market in 2017

PRNewswire: According to CCS Insight's report on wearable tech, shipments of AR and VR headsets are forecast to grow 15 times, to 96 million units by 2020, at a value of $14.5 billion. The report also indicates that the AR segment alone is expected to grow into a $1 billion business in 2017. As the technology continues to evolve, AR products are expected to become an enterprise market opportunity, unlike consumer-focused VR.

Apple is said to be joining the smart-glasses trend and may launch a product by 2018. According to Bloomberg, Apple has recently raised the idea in meetings with potential suppliers of augmented reality glasses components and "has ordered small quantities of near-eye displays from one supplier" for testing purposes. The device would connect to the iPhone and present images over the wearer's vision, à la Google Glass.

Theses Roundup: PD Modeling, 0.4e- Noise, Sensor for Venus Mission

ISAE-SUPAERO publishes PhD Thesis "Estimation and modeling of key design parameters of pinned photodiode CMOS image sensors for high temporal resolution applications" by Alice Pelamatti. The thesis presents a fairly detailed overview of PPD static and dynamic effects:

EPFL publishes PhD Thesis "Ultra Low Noise CMOS Image Sensors" by Assim Boukhayma, presenting a detailed description of the noise sources and of techniques to achieve 0.4e- noise:

KTH Royal Institute of Technology, Sweden, publishes Thesis "Design considerations for a high temperature image sensor in 4H-SiC" by Marco Cristiano. The thesis discusses an approach to creating a sensor for a Venus mission, capable of capturing images at the 460°C temperature of the Venus surface.

HiScene, Inuitive, Heptagon Team on AR Glasses

Shoot: HiScene (not HiSense!), Inuitive and Heptagon have teamed to roll out AR Glasses, billed as HiScene’s next generation of Augmented Reality (AR) glasses. The companies worked together to develop a complete solution for advanced 3D depth sensing and AR/VR applications that delivers excellent performance even in changing light conditions and outdoors. HiAR Glasses incorporate Inuitive’s NU3000 Computer Vision Processor and Heptagon’s advanced illumination.

The glasses’ AR operating system provides stereoscopic interactivity, 3D gesture perception, intelligent speech recognition, natural image recognition and inertial measurement unit (IMU) sensing, presented through an improved 3D graphical user interface.

“We are committed to providing the best possible user experience to our customers, and for this reason we have partnered with Inuitive and Heptagon to create the most intelligent AR glasses available on the market,” said Chris Liao, CEO of HiScene. “The technologies implemented provide a seamless experience in a robust and compact format, without compromising on battery life.”

Inuitive’s NU3000 serves the AR glasses by providing 3D depth sensing and computer vision capabilities. It also acts as a smart sensor hub that accurately time-stamps and synchronizes multiple sensors, off-loading the application processor and shortening development time. “Inuitive’s solution allows Hiscene to provide the reliability, latency and performance its customers expect,” said Shlomo Gadot, CEO of Inuitive. “With Inuitive technology, AR products and applications can now be used outdoors without the sunlight interfering or damaging their efficacy thanks to cameras featuring depth perception.”

The new HiScene AR glasses feature an impressive array of cameras under the hood:

3rd International Workshop on Image Sensors and Imaging Systems (IWISS2016)

The 3rd International Workshop on Image Sensors and Imaging Systems (IWISS2016) is to be held at the Tokyo Institute of Technology on November 17-18, 2016. The agenda is quite impressive, consisting of mostly invited presentations:

- Ion implantation technology for image sensors (Prof. Nobukazu Teranishi, Dr. Genshu Fuse*, and Dr. Michiro Sugitani*, Shizuoka Univ./ Univ. of Hyogo, *Sumitomo Heavy Industries Ion Technology Co., Ltd., Japan)

- 3D-stacking architecture for low-noise high-speed image sensors (Prof. Shoji Kawahito, Shizuoka Univ., Japan)

- A Dead-time free global shutter stacked CMOS image sensor with in-pixel LOFIC and ADC using pixel-wise connections (Prof. Rihito Kuroda, Mr. Hidetake Sugo, Mr. Shunichi Wakashima, and Prof. Shigetoshi Sugawa, Tohoku Univ., Japan)

- 3D stacked image sensor featuring low noise inductive coupling channels (Prof. Masayuki Ikebe, Dr. Daisuke Uchida, Dr. Yasuhiro Take*, Prof. Tetsuya Asai, Prof. Tadahiro Kuroda*, and Prof. Masato Motomura, Hokkaido Univ., *Keio Univ., Japan)

- Low-noise CMOS image sensors towards single-photon detection (Prof. Min-Woong Seo, Prof. Keiichiro Kagawa, Prof. Keita Yasutomi, and Prof. Shoji Kawahito, Shizuoka Univ., Japan)

- Bioluminescence imaging in living animals (Prof. Takahiro Kuchimaru, Prof. Tetsuya Kadonosono, Prof. Shinae Kizaka-Kondoh, Tokyo Inst. of Tech., Japan)

- Restoration of a Poissonian-Gaussian color moving-image sequence (Prof. Takahiro Saito and Mr. Takashi Komatsu, Kanagawa Univ., Japan)

- Always-on CMOS image sensor: energy-efficient circuits and architecture (Prof. Jaehyuk Choi, Sungkyunkwan Univ., Korea)

- Low-voltage high-dynamic-range CMOS imager with energy harvesting (Prof. Chih-Cheng Hsieh and Mr. Albert Yen-Chih Chiou, National Tsing Hua Univ., Taiwan)

- Various ultra-high-speed imaging and applications by Streak camera (Mr. Koro Uchiyama, Hamamatsu Photonics K. K., Japan)

- Time-of-flight image sensors toward micrometer resolution (Prof. Keita Yasutomi and Prof. Shoji Kawahito, Shizuoka Univ., Japan)

- Toward the ultimate speed of silicon image sensors: from 4.5 kfps to Gfps and more (Prof. Goji Etoh, Osaka Univ., Japan)

- Studies on adaptive optics and application to the biological microscope (Prof. Masayuki Hattori, National Inst. for Basic Biology, Japan)

- Application of light-sheet microscopy to cell and development biology (Prof. Shigenori Nonaka, National Inst. for Basic Biology, Japan)

- Non-contact video based estimation for heart rate variability spectrogram using ambient light by extracting hemoglobin information (Prof. Norimichi Tsumura, Chiba Univ., Japan)

- Development of ultraviolet- and visible-light one-shot spectral domain optical coherence tomography and in situ measurements of human skin (Mr. Heijiro Hirayama or Mr. Sohichiro Nakamura, FUJIFILM Corp., Japan)

- Extremely compact hyperspectral camera for drone and smartphone (Prof. Ichiro Ishimaru, Kagawa Univ., Japan)

Hitachi Lensless Camera Adjusts Focus after Image Capture

JCN Newswire: Hitachi develops a camera technology that can capture video without using a lens and adjust focus after the capture by using a film imprinted with a concentric-circle pattern instead of a lens. Since it acquires depth information in addition to planar information, it is possible to reproduce an image at an arbitrary point of focus even after the image has been captured. Hitachi is aiming to utilize this technology in a broad range of applications such as work support, automated driving, and human-behavior analysis with mobile devices, vehicles and robots.

Hitachi's camera is based on the principle of Moiré fringes, which are generated by superimposing concentric circles. It combines the ability to adjust focus after capture, as a light-field camera does, with the thinness and lightness of a lensless camera, while the computational load incurred by image processing is reduced to 1/300. The two main features of the developed camera technology are described as follows.

(1) Image processing technology using Moiré fringes

A film patterned with concentric circles (whose spacing narrows toward the edge of the film) is positioned in front of an image sensor, which captures the shadow cast by light passing through the film. During image processing, a similar concentric-circle pattern is superimposed on the shadow, forming Moiré fringes whose spacing depends on the incidence angle of the light. An image can then be reconstructed from the Moiré fringes by Fourier transform.
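Why the fringes encode direction can be seen in 1D. If the film transmittance is 0.5·(1 + cos(βx²)), light arriving at angle θ casts that pattern shifted by δ = g·tan θ, where g is the film-to-sensor gap. Multiplying the shadow by the unshifted pattern in software produces a term cos(β(2δx - δ²)), a straight fringe with frequency βδ/π cycles per unit length, so the peak of a Fourier transform gives δ and hence θ. The NumPy sketch below assumes an ideal Gabor zone pattern, a single distant point source and a purely geometric shadow (no diffraction); β, the 1 mm gap and the 10 mm sensor slice are illustrative values, and this toy is not Hitachi's actual 2D processing.

```python
import numpy as np


def zone_pattern(x, beta, shift=0.0):
    """Idealized Gabor zone pattern 0.5 * (1 + cos(beta * (x - shift)^2))."""
    return 0.5 * (1.0 + np.cos(beta * (x - shift) ** 2))


def estimate_incidence_angle(shadow, x, beta, gap_mm):
    """Estimate the incidence angle (degrees) of a distant point source.

    shadow: sampled sensor signal; x: sample positions in mm (uniform grid).
    """
    moire = shadow * zone_pattern(x, beta)          # superimpose pattern in software
    moire = moire - moire.mean()                    # remove the DC component
    spectrum = np.abs(np.fft.rfft(moire))
    freqs = np.fft.rfftfreq(x.size, d=x[1] - x[0])  # cycles per mm
    spectrum[0] = 0.0
    f_peak = freqs[np.argmax(spectrum)]             # straight-fringe frequency
    shift = np.pi * f_peak / beta                   # from f = beta * shift / pi
    return np.degrees(np.arctan(shift / gap_mm))


if __name__ == "__main__":
    x = np.linspace(-5.0, 5.0, 4096, endpoint=False)  # 10 mm sensor, 1D slice
    beta, gap = 50.0, 1.0                             # rad/mm^2 and mm (illustrative)
    true_angle = 10.0
    shadow = zone_pattern(x, beta, shift=gap * np.tan(np.radians(true_angle)))
    print(estimate_incidence_angle(shadow, x, beta, gap))  # close to 10
```

In this toy, angular resolution is set by the FFT bin spacing (1 / sensor width). With many point sources each contributes its own fringe frequency, so the magnitude of the whole spectrum is the image, and a single FFT replaces the heavier reconstruction used by other lensless designs.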

(2) Focus adjustment technology of captured images

The focal position is set by the size of the concentric-circle pattern that is superimposed on the shadow cast on the image sensor. Because this pattern is superimposed by image processing after capture, the focal position can be adjusted freely.
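Under a simple geometric model (our assumption, not Hitachi's published math), a point source at distance z in front of the film casts a shadow magnified by M = (z + g)/z, where g is the film-to-sensor gap, so the shadow pattern becomes cos(βx²/M²). Focusing on that distance then means superimposing a digital pattern with β' = β/M², i.e. a slightly larger set of circles. A minimal sketch with hypothetical helper names:

```python
def shadow_magnification(object_distance_mm: float, gap_mm: float) -> float:
    """Magnification of the film's shadow for a point source at finite distance."""
    return (object_distance_mm + gap_mm) / object_distance_mm


def refocused_beta(beta: float, object_distance_mm: float, gap_mm: float) -> float:
    """Coefficient of the digital pattern cos(beta' * x^2) that matches the shadow."""
    m = shadow_magnification(object_distance_mm, gap_mm)
    return beta / m ** 2


if __name__ == "__main__":
    for z in (10.0, 100.0, 1000.0):  # object distances in mm (illustrative)
        print(z, refocused_beta(50.0, z, 1.0))
```

As z grows, M tends to 1 and the digital pattern reverts to the film pattern, which is the focus-at-infinity case; with a 1 mm gap, only fairly close objects need a noticeably rescaled pattern.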

To evaluate the technology, an experiment was conducted with a 1 cm² image sensor and a film imprinted with a concentric-circle pattern positioned 1 mm from the sensor. It confirmed that video could be captured at 30fps with a standard notebook PC doing the image processing.

Heptagon Announces 5m ToF Range Sensor

BusinessWire: Heptagon announces ELISA 3DRanger, calling it the world’s first 5m ToF 3DRanger Smart Sensor. Under certain conditions, ELISA more than doubles the previous maximum range of 2m. When integrated with a smartphone camera, ELISA enables applications like a virtual measuring tape, security features, people counting, augmented reality, and enhanced gaming. The range extension also improves auto-focus applications. Other new features include SmudgeSense, the company’s proprietary active smudge detection and resilience technology, and a 2-in-1 Proximity Mode.

The 5m distance range was achieved in normal office lighting conditions using high accuracy mode with the target object covering the full 29deg FOV. In Proximity Mode, distances between 10mm and 80mm are measured and the sensor provides a flag when a user-defined threshold is reached.
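The announcement doesn't say how ELISA encodes distance. If it used a continuous-wave (phase-based) ToF scheme, which is purely an assumption here, the modulation frequency f would cap the unambiguous range at c/(2f), so reaching 5 m would require f of about 30 MHz or lower, versus about 75 MHz for 2 m. A small sketch of that relation (helper names are ours):

```python
import math

C = 299_792_458.0  # speed of light, m/s


def unambiguous_range_m(mod_freq_hz: float) -> float:
    """Distance at which the measured phase wraps around by 2*pi."""
    return C / (2.0 * mod_freq_hz)


def max_mod_freq_for_range(range_m: float) -> float:
    """Highest modulation frequency that keeps range_m unambiguous."""
    return C / (2.0 * range_m)


def phase_to_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Map a measured phase shift to distance within one ambiguity interval."""
    wrapped = phase_rad % (2.0 * math.pi)
    return wrapped / (2.0 * math.pi) * unambiguous_range_m(mod_freq_hz)


if __name__ == "__main__":
    print(f"{max_mod_freq_for_range(5.0) / 1e6:.1f} MHz")  # 30.0 MHz
    print(f"{max_mod_freq_for_range(2.0) / 1e6:.1f} MHz")  # 74.9 MHz
```

The trade-off this illustrates: lowering the modulation frequency extends range but, for a given phase noise, coarsens depth precision, which is why CW ToF designs often combine two frequencies.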

ELISA SmudgeSense uses patented ToF smudge pixels to detect high levels of smudge in real time, and alert the user about a problem through software. Additional proprietary algorithms are employed to dynamically increase smudge resilience using the data provided by the smudge pixels. This leads to optimized system performance, not only during manufacturing test, but in real life.

Mass production of the new 3DRanger begins in December 2016.

Sony Uses RGBC-IR Sensor to Improve White Balance

This two-month-old video shows Sony using an RGBC-IR sensor to improve white balance in a mobile phone:

Yole on Automotive Imaging: 371M Automotive Imaging Devices in 2021

Yole Developpement releases its "Imaging technology transforming step by step the automotive industry: Yole’s analysts announce 371 million automotive imaging devices in 2021" report. A few statements from the report:

Growth of automotive imaging is also being fueled by park assist, so 360° surround view camera volume is skyrocketing. While a rearview camera is becoming mandatory in the United States by 2018, that uptake is dwarfed by 360° surround view cameras, which enable a “bird’s eye view” perspective. This trend benefits most companies like Omnivision at the sensor level, and Panasonic and Valeo, which have become leading manufacturers of automotive cameras.

Mirror replacement cameras are currently the big unknown; their take-off will depend primarily on their appeal and on car design regulations. Europe and Japan are at the forefront of this trend, which should become only marginally significant by 2021.

Solid-state lidar is much talked about and will start appearing in high-end cars by 2021. Cost reduction will be a key driver as car manufacturers feel the push for semi-autonomous driving more strongly.

LWIR-based night vision cameras were initially perceived as a status symbol. However, they are increasingly appreciated for their ability to automatically detect pedestrians and wildlife, so LWIR solutions will become integrated into ADAS systems in the future. 3D cameras, for their part, will be limited to in-cabin infotainment and driver monitoring. This technology will be key for luxury cars and is therefore of limited use today.

- Imaging technology, which is currently mainly cameras, is exploding into the automotive space, and is set to grow at % CAGR to reach US$7.3B in 2021.

- Infotainment and ADAS propel automotive imaging.

- Imaging will transform the car industry en-route to the self-driving paradigm shift.

- A mazy technological roadmap will bring many opportunities.

- “From less than one camera per car on average in 2015, there will be more than three cameras per car by 2021”, announces Pierre Cambou, Activity Leader, Imaging at Yole. “It means 371 million automotive imaging devices”.
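The camera-per-car figures imply a growth rate of their own, separate from the revenue CAGR in the first bullet. Assuming six years of growth from 2015 to 2021, going from about one camera per car to about three takes roughly 20% compound annual growth; since the quote says "less than one" and "more than three", this is a lower bound. A small Python helper (`implied_cagr` is an illustrative name) makes the arithmetic explicit:

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# About 1 camera per car in 2015 to about 3 per car in 2021 (six years of growth).
rate = implied_cagr(1.0, 3.0, 2021 - 2015)
print(f"{rate:.1%}")  # → 20.1%
```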

Call for Nominations for the 2017 Walter Kosonocky Award

International Image Sensor Society calls for nominations for the 2017 Walter Kosonocky Award for Significant Advancement in Solid-State Image Sensors.

The Walter Kosonocky Award is presented biennially for THE BEST PAPER presented in any venue during the prior two years representing significant advancement in solid-state image sensors. The award commemorates the many important contributions made by the late Dr. Walter Kosonocky to the field of solid-state image sensors. Personal tributes to Dr. Kosonocky appeared in the IEEE Transactions on Electron Devices in 1997. Founded in 1997 by his colleagues in industry, government and academia, the award is also funded by proceeds from the International Image Sensor Workshop.

The award is selected from nominated papers by the Walter Kosonocky Award Committee, announced and presented at the International Image Sensor Workshop (IISW), and sponsored by the International Image Sensor Society (IISS).

The deadline for receiving nominations is February 6th, 2017. Your nominations should be sent to Junichi Nakamura (Brillnics Japan Inc.), Chair of the IISS Award Committee.

Autosens: DxO on ISP Challenges

The Autosens 2016 Conference kindly permitted me to reproduce a few slides from the DxO presentation "Dealing with the Complexities of Camera ISP Tuning" by Clement Viard, R&D Sr. Director, and Frederic Guichard, CTO & co-founder.

Pixart and Himax Report Quarterly Earnings

Pixart reports 5.5% QoQ sales growth in Q3 2016 and a product mix shift away from mouse sensors:

Himax says its Q3 image sensor sales were worse than expected, although the company has not released any numbers. It projects image sensor growth in the next quarter.

"Himax continues to make great progress with its new smart sensor areas by collaborating with certain heavyweight partners, including a major e-commerce customer, leading consumer electronics brands and a heavyweight international smartphone chipset maker. By pairing a DOE integrated WLO laser diode collimator with a near infrared CIS, the Company is offering the most effective total solution for 3D sensing and detection in the smallest form factor, which enables easy integration into next generation smartphones, AR/VR devices and consumer electronics. Similarly, the ultra-low-power QVGA CMOS image sensor can also be bundled with the Company’s WLO lens to support super low power computer vision to enable new applications across mobile devices, consumer electronics, surveillance, drones, IoT and artificial intelligence. The Company will report business developments in these new territories in due course.

Regarding other CIS products, the Company maintains a leading position in laptop applications and will increase shipments for multimedia applications such as surveillance, drones, home appliances, consumer electronics, etc."

ActLight Announces Heartrate Sensing Project in Cooperation With ON Semi

PRNewswire: ActLight, a Swiss company known for its Dynamic Photo Diodes (DPD), announces a project to develop next-generation, low-energy heartrate sensors for wearable devices in cooperation with ON Semiconductor.
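Wearable heartrate sensors are typically photoplethysmography (PPG) devices: a photodiode picks up the small, pulse-synchronous change in light reflected from the skin, and the heart rate follows from the spacing of the pulses. The Python sketch below shows that generic last step on a synthetic waveform using simple peak counting. It is an illustration under those assumptions, not ActLight's or ON Semiconductor's processing; `heart_rate_bpm` and its 0.3 s minimum peak spacing are hypothetical choices.

```python
import math
from typing import List


def heart_rate_bpm(samples: List[float], fs_hz: float, min_gap_s: float = 0.3) -> float:
    """Estimate heart rate from a PPG-like waveform by simple peak counting.

    A sample counts as a pulse peak if it is above the signal mean and is a
    local maximum; peaks closer than min_gap_s to the previous one are ignored
    (0.3 s caps the estimate at 200 bpm). Returns 0.0 if fewer than two peaks.
    """
    mean = sum(samples) / len(samples)
    min_gap = int(min_gap_s * fs_hz)
    peaks: List[int] = []
    for i in range(1, len(samples) - 1):
        is_local_max = samples[i] >= samples[i - 1] and samples[i] > samples[i + 1]
        if samples[i] > mean and is_local_max:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    if len(peaks) < 2:
        return 0.0
    # Average beat-to-beat interval in seconds, converted to beats per minute.
    mean_interval_s = (peaks[-1] - peaks[0]) / (len(peaks) - 1) / fs_hz
    return 60.0 / mean_interval_s


# Synthetic 72 bpm (1.2 Hz) pulse sampled at 50 Hz for 10 s.
fs = 50.0
signal = [math.sin(2 * math.pi * 1.2 * n / fs) for n in range(int(10 * fs))]
print(round(heart_rate_bpm(signal, fs)))  # → 72
```

Real PPG signals also need band-pass filtering and motion-artifact rejection, which this sketch leaves out.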

"The healthcare industry is becoming more reliant on new methods to monitor and treat patients. This - along with an increased interest in fitness and wellness - has necessitated more affordable, precise, wearable sensing options," commented Jakob Nielsen, Senior Manager Consumer Health Product Line at ON Semiconductor. "Today's smartwatches and wearables fall short of meeting customer requirements when it comes to heartrate measurement precision and application battery life time. Working with ActLight we will help to bring next generation heartrate sensors to the market that will address these requirements and deliver a full sensing solution for integration in a multitude of MedTech and consumer electronics applications."

"Our technology offers unique competitive advantages to our partners when compared to existing Photo Diodes used in wearable heartrate solutions," stated Serguei Okhonin, CEO of ActLight. "These include lower power consumption, simplified electronics and smaller footprint making it perfectly suited for miniaturized wearable devices. We are happy to see the support from ON Semiconductor and them sharing the potential of our technology in the area of heartrate sensing."

ActLight's DPD principle is presented in the company's YouTube video:

PRWeb: ActLight also announces a ToF sensor based on its DPD.

“ActLight’s ToF technology is pretty remarkable,” commented Jean-Luc Jaffard, Consultant & Advisor at Red Belt SA and a renowned authority on Imaging Technology and Applications (now with Chronocam). “The company believes that when used in range meters, it offers good immunity from the effects of background light, especially direct sunlight. Current optical sensors are often “blinded” by direct sunlight, an effect that likely led to some recent, highly publicized, vehicular accidents that occur while using Advanced Driver Assistance System (ADAS) cameras or when utilizing autonomous driving functions, and which hinders the adoption of autonomous driving altogether.”

Jaffard added, “ADAS and vehicle perception, autonomous vehicles and aerial drones are hot and emerging fields. ActLight’s ToF technology may be perfectly suited to supplement existing optical and computer vision technologies, thus help overcoming the hindrance of direct sunlight and other optical artefacts.”

“Our technology offers our partners some unique competitive advantages when compared to existing Avalanche Photo Diodes (APDs) and Single Photon Avalanche Diodes (SPADs),” stated Serguei Okhonin, CEO of ActLight, “these include low operation voltage, simplified electronics (due to high internal gain, there is no need for any amplifier as we directly interact with CMOS circuits), smaller footprint, and of course, lower price, making it perfectly suited for applications ranging from miniaturized wearable devices all the way to autonomous vehicles and aerial drones. Our company is actively engaging with leading 3D imaging and silicon companies in evaluation and integration of our technology into their next generation sensors”.
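The background-light claim is easier to picture with a generic direct ToF range meter in mind. Ambient photons (for example from direct sunlight) arrive at random times and form a roughly flat floor in a histogram of arrival times, while laser echoes pile up in the bin that matches the round trip. The Python sketch below simulates such a histogram and accepts the peak only if it stands well above the estimated floor. It is a textbook illustration under assumed parameters (1 ns bins, the photon counts shown, a 5-sigma detection rule), not a description of how ActLight's DPD-based sensor works.

```python
import math
import random
import statistics
from typing import List, Optional

C = 299_792_458.0  # speed of light, m/s


def simulate_histogram(target_m: float, n_bins: int = 200, bin_ns: float = 1.0,
                       ambient_photons: int = 5000, signal_photons: int = 300,
                       jitter_ns: float = 0.5, seed: int = 42) -> List[int]:
    """Photon arrival-time histogram: flat ambient floor plus a laser echo."""
    rng = random.Random(seed)
    hist = [0] * n_bins
    # Ambient light (e.g. sunlight) arrives at random times: a roughly flat floor.
    for _ in range(ambient_photons):
        hist[rng.randrange(n_bins)] += 1
    # Laser echoes cluster around the round-trip time, blurred by timing jitter.
    t_echo_ns = 2.0 * target_m / C * 1e9
    for _ in range(signal_photons):
        b = math.floor(rng.gauss(t_echo_ns, jitter_ns) / bin_ns)
        if 0 <= b < n_bins:
            hist[b] += 1
    return hist


def estimate_distance_m(hist: List[int], bin_ns: float = 1.0,
                        k_sigma: float = 5.0) -> Optional[float]:
    """Return the target distance, or None if no echo clears the ambient floor."""
    floor = statistics.median(hist)  # robust estimate of the ambient level
    peak = max(range(len(hist)), key=hist.__getitem__)
    # Ambient counts are roughly Poisson, so their spread is about sqrt(floor).
    if hist[peak] - floor < k_sigma * math.sqrt(max(floor, 1.0)):
        return None
    t_ns = (peak + 0.5) * bin_ns  # centre of the peak bin
    return C * t_ns * 1e-9 / 2.0


print(estimate_distance_m(simulate_histogram(10.0)))
```

Raising `ambient_photons` lifts the floor and its noise, so a sensor that collects fewer ambient photons per bin keeps the echo detectable in brighter light.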

Valeo Autonomous Vehicle Guided by LiDAR and Camera

After completing its 13,000 mile hands-off drive across the USA, Valeo's autonomous vehicle continues with a 13,000 km trip in Europe.

"The Valeo SCALA laser scanner is the key enabler in the Cruise4U system. The Valeo SCALA laser device scans the area in front of the vehicle and detects vehicles, motorbikes, pedestrians and static obstacles like trees, parked vehicles and guard rails – all with an extremely high level of accuracy. It works during the day and at night, when the car is driving at both high and low speeds. Using the collected data, the scanner creates a map of the environment allowing it to analyze and anticipate events around the vehicle.

On the Hands-Off U.S. Tour, the Valeo SCALA will work with a front-facing camera to scan the environment ahead of the vehicle, detecting any obstacles with extreme precision, and four corner radars that ensure safe lane changing."
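Building a map of the environment from a laser scanner starts with turning each return (a bearing and a range) into a position around the car. A minimal Python sketch, assuming a hypothetical scan format of (bearing in degrees, range in metres) pairs and a 0.5 m grid, converts returns into vehicle-frame coordinates and marks the occupied cells of a simple occupancy map. The actual SCALA output format and Valeo's processing are not described in the source.

```python
import math
from typing import Iterable, List, Set, Tuple


def scan_to_points(scan: Iterable[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Convert (bearing_deg, range_m) returns to vehicle-frame (x, y) in metres.

    Convention assumed here: x points forward, y to the left, bearing 0 is
    straight ahead and positive bearings turn left.
    """
    points = []
    for bearing_deg, range_m in scan:
        a = math.radians(bearing_deg)
        points.append((range_m * math.cos(a), range_m * math.sin(a)))
    return points


def occupied_cells(points: Iterable[Tuple[float, float]],
                   cell_m: float = 0.5) -> Set[Tuple[int, int]]:
    """Grid cells containing at least one return: a minimal occupancy map."""
    return {(math.floor(x / cell_m), math.floor(y / cell_m)) for x, y in points}


# Hypothetical returns: a car dead ahead, a guard rail to the left, a tree to the right.
scan = [(0.0, 20.0), (10.0, 15.0), (-30.0, 8.0)]
print(sorted(occupied_cells(scan_to_points(scan))))  # → [(13, -8), (29, 5), (40, 0)]
```

A production system would also track free space along each beam and fuse successive scans as the vehicle moves; this sketch only marks where returns landed.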